What is Artificial Intelligence (AI)?

The History of Artificial Intelligence:

Artificial intelligence, commonly known as AI, has become one of the most common “buzzwords” of the new millennium. Although the term is used constantly in conversation, news, and technology, many people still don’t know exactly what it means. The goal of this blog post is to explain how AI works, the different types of AI (as well as the major subgroups) that exist today, and why AI technology matters to Segmed.

The term “artificial intelligence” was coined by John McCarthy in his 1955 proposal for the Dartmouth Summer Research Project on Artificial Intelligence, a workshop held at Dartmouth College over several weeks in the summer of 1956. The workshop brought together scientists who proceeded from the conjecture that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it,” and it is widely regarded as the event that launched the field of AI. They also identified learning, the use of language, and creativity as key features of an intelligent machine.

Little did they know at the time that their ideas would remain the predominant thinking in the field for decades and go on to change the future of technology.

AI, Defined:

Now, let’s go back to the AI basics, which are often overlooked. Because artificial intelligence has become so broad in usage and meaning, it is difficult to find an all-encompassing definition of the term. In fact, dozens of papers have been published with the sole purpose of defining AI. One well-known article, written by Bulgarian mathematician Dimiter Dobrev in 2004, defines artificial intelligence as “a program which in an arbitrary world will cope not worse than a human.”

Another famous definition, from Alan Turing, describes intelligent behavior as “the ability to achieve human-level performance in all cognitive tasks, sufficient to fool an interrogator.” Turing proposed this definition in 1950, six years before the Dartmouth Summer Research Project on Artificial Intelligence.

Although Turing’s definition predates the term “artificial intelligence,” it is still highly regarded for how precisely it sets the expectations and qualifications for an intelligent system. The two definitions differ in that Turing believed intellect is gained over time, whereas Dobrev believed knowledge is separate from intelligence, implying that even newborns are intelligent from birth. As this contrast shows, definitions of intelligence can vary widely. Regardless of how intelligence is defined, however, all definitions of artificial intelligence share a common thread: AI is a non-human machine or system that is capable of intelligence.

The Different Types of AI:

Artificial intelligence is generally divided into three subgroups: artificial narrow intelligence (ANI), artificial general intelligence (AGI), and artificial superintelligence (ASI). ANI is considered weak AI, whereas AGI and ASI are considered strong AI. Typically, ANI is considered to fall short of human intelligence overall because it is limited to specific tasks, AGI to match human intelligence, and ASI to surpass it.

The AI we encounter in everyday life (Siri, fraud detection, Deep Blue, diagnostic pathology, chatbots, etc.) is all a form of ANI. As an article from The Verge put it, “We’ve only seen the tip of the algorithmic iceberg.” Much more needs to be accomplished in the field before we reach AGI, let alone ASI. Still, some forecasts suggest we may reach artificial general intelligence around the year 2060, a milestone often associated with what is called the ‘singularity.’

Now that we have defined artificial intelligence in more comprehensible terms and understand its three subgroups, let’s explore how ANI (the AI we see in the world today) achieves its intelligence. AI uses a variety of approaches to solve problems, each an attempt to mimic the intelligence we expect from such complex machines.

These typically fall into a handful of categories: machine learning (ML), deep learning (DL), natural language processing (NLP), automatic speech recognition (ASR), computer vision, and expert systems. Let’s take a closer look at each of these different subsections of AI.

1. Machine Learning & Deep Learning:

Machine learning, often the most talked-about AI technology, “uses statistics to find patterns in massive amounts of data.” These patterns can then be applied to almost any domain. Segmed’s customers who are developing medical AI algorithms need massive amounts of labeled medical data: machine learning lets their algorithms learn patterns from that labeled data and then recognize the same patterns in new, unlabeled medical data.

Machine learning has many subtypes, one of which is deep learning (DL). Deep learning is a kind of machine learning that is especially powerful at finding patterns. Unlike many other ML methods, DL uses neural networks, loosely inspired by how the neurons in your brain work together, to find subtler patterns that are harder to identify.
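Deep learning networks are built from many layers of artificial neurons. As a minimal sketch of that basic unit (not of deep learning itself), the Python below trains a single neuron, a classic perceptron, to reproduce the logical AND of two inputs. The toy data, the `predict` and `train_perceptron` helpers, and the learning rate of 1 (chosen so the arithmetic stays in integers) are illustrative assumptions; real deep learning frameworks stack many differentiable neurons and train them with gradient descent.

```python
# A single artificial neuron (perceptron): a weighted sum of the inputs plus a
# bias, passed through a step activation. Deep networks stack many such units.

def predict(weights, bias, x):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else output 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, epochs=10, lr=1):
    """Perceptron learning rule: nudge the weights toward the correct answer."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Hypothetical toy data: the logical AND of two binary inputs.
AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train_perceptron(AND_DATA)
predictions = [predict(weights, bias, x) for x, _ in AND_DATA]
print(predictions)  # → [0, 0, 0, 1]
```

After a few passes over the data, the weights stop changing and the neuron's outputs match AND on every input.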

Two other important categories of machine learning are supervised learning and unsupervised learning, although these still do not cover every ML approach. Supervised learning is used when the desired outcome for a given data set is known. It relies on a data set that has already been labeled by humans: the algorithm learns from those labeled examples, and when new, unlabeled data is fed to it, it classifies that data based on what it learned.
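Supervised learning can be illustrated with one of its simplest algorithms, k-nearest neighbors. The Python below is a minimal sketch under stated assumptions: the two-feature points and their labels "A" and "B" are invented stand-ins for human-labeled data, and `knn_classify` is a hypothetical helper, not part of any library. A new, unlabeled point receives the majority label of its `k` closest labeled examples.

```python
import math
from collections import Counter

# Hypothetical labeled training data: (feature vector, human-provided label).
LABELED = [
    ((1.0, 1.2), "A"), ((0.8, 0.9), "A"), ((1.1, 0.7), "A"),
    ((4.0, 4.2), "B"), ((4.3, 3.9), "B"), ((3.8, 4.4), "B"),
]

def knn_classify(point, labeled, k=3):
    """Label an unlabeled point by majority vote of its k nearest labeled neighbors."""
    nearest = sorted(labeled, key=lambda item: math.dist(point, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# New, unlabeled points are classified using the labeled examples.
print(knn_classify((0.9, 1.0), LABELED))  # → A
print(knn_classify((4.1, 4.0), LABELED))  # → B
```

Real systems use far more features and examples, but the principle is the same: the human-provided labels determine how new data is classified.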

When data is complex and difficult for humans to categorize, unsupervised learning can be a better approach. It is also used when the desired outcome is unknown or the data is unlabeled. In unsupervised learning, the algorithm looks for patterns and similarities within the data on its own.

Once the algorithm separates the data into groups, humans have to determine what the members of each group have in common, because the algorithm does not explain its rationale for the grouping.
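Unsupervised learning can be sketched with k-means clustering, one widely used algorithm of this kind. In the minimal Python below, the points are hypothetical and carry no labels, and `k_means` is an illustrative helper that starts from the first `k` points, then alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its cluster. The output says only which points belong together; deciding what each cluster means is left to a human.

```python
import math

# Hypothetical unlabeled data: no human has said which group each point belongs to.
POINTS = [(1.0, 1.1), (4.2, 3.9), (0.9, 0.8), (3.9, 4.1), (1.2, 1.0), (4.0, 4.3)]

def mean(points):
    """Coordinate-wise average of a list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))

def k_means(points, k, iterations=10):
    """Group points into k clusters by alternating assignment and update steps."""
    centroids = list(points[:k])  # simple deterministic start: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        # Assignment step: each point joins the cluster of its nearest centroid.
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if empty).
        centroids = [mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return clusters

for i, cluster in enumerate(k_means(POINTS, k=2)):
    print(f"cluster {i}: {cluster}")
```

Production code would typically use random or k-means++ initialization and stop when assignments no longer change; the fixed start here just keeps the sketch reproducible.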

2. Natural Language Processing (NLP) & Automatic Speech Recognition (ASR):

Like ML, natural language processing analyzes data and identifies patterns, but NLP focuses specifically on human language, whether written or spoken. Computers process this type of data by identifying keywords and patterns, and NLP is what helps them understand the context of the text. This type of AI allows voice assistants (think Siri and Alexa) and chatbots to understand the context of your spoken or written language, for example to tell homophones such as “their” and “there” apart. NLP often works together with automatic speech recognition, which converts spoken words into text. ASR identifies patterns in audio speech waves, maps them to the sounds of a language, and finally assembles those sounds into words. For spoken input, ASR is essentially the first step before NLP can begin.

3. Computer Vision:

Computer vision is similar to NLP, but it is applied to images instead of text. A computer sees an image only as a grid of pixel values, so on its own it typically cannot identify the individual elements in an image. Computer vision is what lets computers take in and process images to determine what they show and in what context. As with ML, computer vision achieves this by learning from large image datasets in which the individual images have been annotated. Currently, one of the most popular uses of computer vision is facial recognition.

4. Expert Systems:

An expert system is made up of two main parts: the knowledge base (a collection of facts and rules that the system draws on, acquired and entered by humans) and the inference engine (which interprets and evaluates the contents of the knowledge base to find an answer or solution to a problem). The knowledge base plays a role loosely similar to that of a training data set. Many expert systems can display the path they took to reach a conclusion, so a human can retrace the system’s steps. This technology is helpful in a wide range of areas, such as chemical analysis, financial planning, and medical diagnosis. In fact, expert systems are one of the most common types of AI in healthcare. However, this technology should never be the sole decision-maker in any field; it should be used in conjunction with humans.
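To make the two parts of an expert system concrete, here is a minimal sketch in Python. The knowledge base is a hypothetical list of human-written if-then rules, and the inference engine uses forward chaining (one common inference strategy among several) to derive new conclusions while recording each step, which is how a human could retrace the system's reasoning. The rule and fact names and the `forward_chain` helper are invented for illustration and are not clinical guidance.

```python
# Knowledge base (hypothetical, NOT clinical guidance): each rule maps a set of
# required facts to a new conclusion.
RULES = [
    ({"fever", "cough"}, "possible_respiratory_infection"),
    ({"possible_respiratory_infection", "abnormal_chest_xray"}, "refer_for_radiologist_review"),
]

def forward_chain(facts, rules):
    """Inference engine: keep applying rules until no new facts can be derived.

    Returns the final set of facts plus a trace of every rule that fired,
    so a human can retrace how each conclusion was reached.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(f"{' + '.join(sorted(conditions))} -> {conclusion}")
                changed = True
    return known, trace

facts, trace = forward_chain({"fever", "cough", "abnormal_chest_xray"}, RULES)
for step in trace:
    print(step)
```

Note that the final conclusion is a referral for human review rather than a diagnosis, in keeping with the point that such systems should support, not replace, human decision-makers.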

Why This Matters at Segmed:

The areas explained above are just a few of the many types of AI. Each has its advantages and disadvantages, and which one to choose depends on the application. There is also considerable overlap among these subgroups.

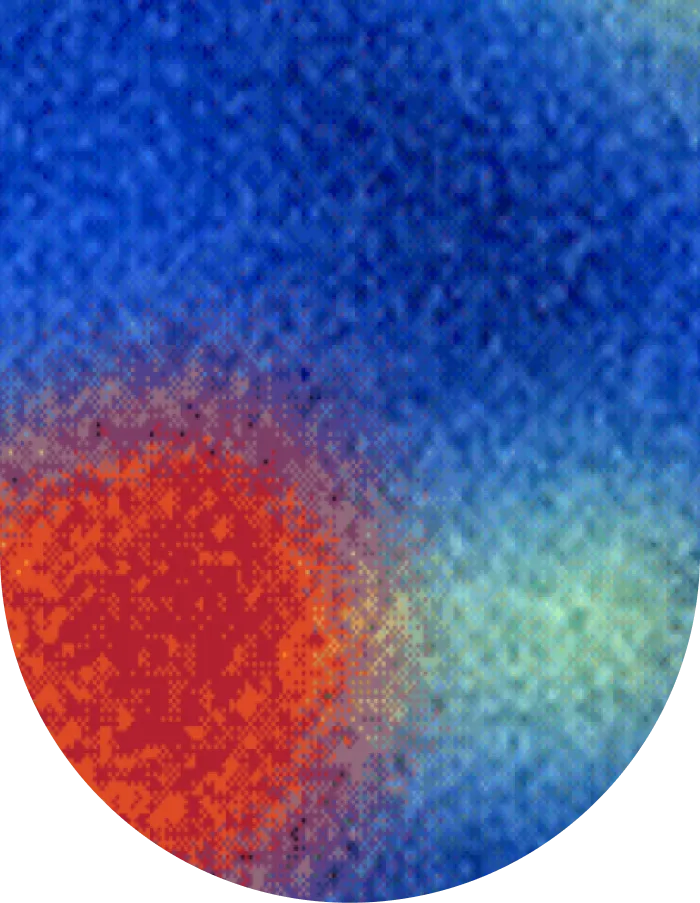

Figure 1 above gives a visual representation of how these subgroups connect and where they differ. While there are myriad uses for artificial intelligence today, this is only the beginning. As technology advances, so will the possibilities.

That’s why Segmed uses many different types of AI technology, seizing every opportunity that AI has to offer. We serve companies developing expert systems for deployment in medicine. To support them, we are developing some of our own algorithms, including NLP, computer vision, and expert systems, to better understand each dataset we assemble.

Through the use of these algorithms, Segmed and our customers will be able to take part in the AI healthcare revolution.